After being completely buried under end-of-year admin for a few weeks, it's great to be back to work on this project. I've been working on plumbing in the latest dataset from the Archives, which has doubled in size to around 57,500 series. In an attempt to create a browsable overview of the whole collection, I have been developing the earlier grid sketches, feeding in more data, and extra parameters. Also new in this dataset are two interesting features of archival series: items - number of catalogued items in the series - and shelf metres - the amount of physical space the series occupies. In this interactive browser, you can navigate around the whole collection, and switch between modes that display these parameters.

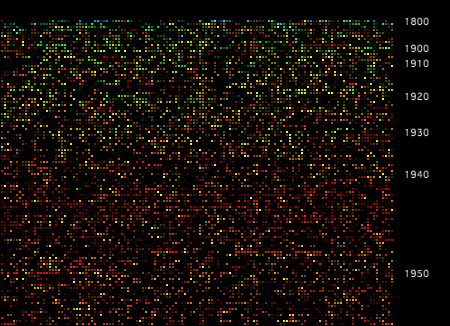

A brief explanation. Like the earlier grid, series are sorted by start date (still contents start date, rather than accumulation, for the moment) then simply laid out from top left to bottom right. In this version I've added some year labels on the Y axis, which show the distribution of the series through time. Hue is mapped directly to date span: red series have a short date span, blue have a long span. The four modes in this interactive change the mapping for brightness. In the default display brightness is mapped to items (I); M switches the brightness key to shelf metres; P shows items per shelf metre; and S switches the brightness key off (showing span/hue only).
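
The layout and hue mapping described above can be sketched in a few lines of Java. This is not the code behind the interactive, just a minimal illustration: the class and method names, the column count, and the maximum span are all hypothetical, and it assumes the series have already been sorted by start date.

```java
// Illustrative sketch only: names, column count, and span ceiling are assumptions.
final class GridSketch {
    /** Column of the cell for the series at position `index` in the sorted list. */
    static int column(int index, int columns) {
        return index % columns;
    }

    /** Row of the cell: cells fill left to right, then wrap to the next row. */
    static int row(int index, int columns) {
        return index / columns;
    }

    /**
     * Map a date span (in years) linearly to a hue in degrees:
     * 0 (red) for the shortest spans, 240 (blue) for the longest.
     */
    static float hueForSpan(int spanYears, int maxSpanYears) {
        int clamped = Math.max(0, Math.min(spanYears, maxSpanYears));
        return 240f * clamped / (float) maxSpanYears;
    }

    public static void main(String[] args) {
        int columns = 250; // hypothetical grid width in cells
        int last = 57499;  // last index in a collection of 57,500 series
        System.out.println("row " + row(last, columns) + ", column " + column(last, columns));
        System.out.println("hue for a 50-year span: " + hueForSpan(50, 100));
    }
}
```

Sorting first and then deriving position from the index alone is what makes the Y axis readable as a timeline: each row covers a run of consecutive start dates.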

Both these new parameters have a wide range and a very uneven distribution, and as you can see in the visualisation there are many series with zero items and/or zero metres. In fact around 30000 series (over half this collection) have zero digitised items, while around 2600 have between 100 and 1000 items, and 13 have more than 10000 items. Around 20000 series have zero shelf metres, around the same number have 0.1-1m, around 10000 have between 1m and 10m, and the rest have more than 10m - with a couple of dozen series with more than 1km of shelf space! It's important to remember, as Archives staff have mentioned to me, that items here refers to digitised items. Series with zero listed items aren't empty; they just haven't been digitised. Similarly I suspect that a value of zero shelf metres suggests that the data doesn't exist. Even if it can't be taken at face value, items is an interesting metric because the Archives digitises records largely on the basis of demand from users, so a series that is frequently requested is more likely to be digitised. Items, then, is partly a measure of how interesting a series is to Archives users.
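
With values running from zero to more than 10000, a straight linear mapping to brightness would leave almost every series looking black. One common way around this is a logarithmic scale. The sketch below is an assumption about how such a mapping could work, not a description of what the interactive actually does; the class name, method name, and the choice of `log1p` are all mine.

```java
// Illustrative sketch only: the log mapping is an assumed technique,
// not necessarily the one used in the interactive.
final class BrightnessSketch {
    /**
     * Map a non-negative value (items or shelf metres) to a brightness in [0, 1].
     * Zero stays at 0 so that "no data" series remain visually distinct;
     * everything else is compressed logarithmically.
     */
    static float brightnessFor(double value, double maxValue) {
        if (value <= 0 || maxValue <= 0) {
            return 0f;
        }
        double scaled = Math.log1p(value) / Math.log1p(maxValue);
        return (float) Math.min(1.0, scaled);
    }

    public static void main(String[] args) {
        double max = 10000;
        for (double v : new double[] {0, 10, 100, 1000, 10000}) {
            System.out.println(v + " items -> brightness " + brightnessFor(v, max));
        }
    }
}
```

On a linear scale a series with 100 items would get a brightness of 0.01 against a maximum of 10000; on this log scale it lands at about half brightness, so the mid-range series stay visible.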

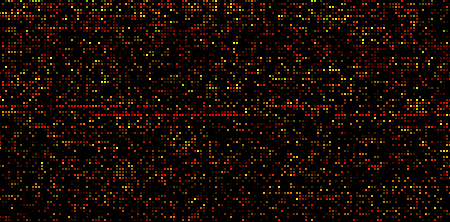

The items view of the grid allows us to see, for example, that there are more digitised items in series commencing in the 20s and 30s, than there are in series commencing in the 60s and 70s. We can also see a dense band of well-digitised series from the late 90s onwards. I don't know, but I'd suspect that these are "born digital" records - no digitisation required. The most striking feature of the items graph is the narrow red streaks around 1950: these are Displaced Persons records from 1948-52, each series corresponding to a single incoming ship (above). These records show up here because they are well digitised (interesting) but also because there are many sequential series forming visual groups. There are other pockets of "interestingness", but they are less obvious. This reveals one drawback of this grid layout, which is that related series are not necessarily grouped together. I'm hoping to address this when I start looking at agencies, functions, and links between series.

A few technical notes. After running into problems storing data in plain text, I changed the code to read the source XML in, pick out certain fields or elements, and write the data back out as XML. I used Christian Riekoff's ProXML library for Processing, which makes the file writing part very easy (Processing's built-in XML functions don't include a file writer). This worked well, except when it came to exporting web applets, which just refused to load. Rummaging around in the console log, and turning on Java's debugging tools (thanks Sam), showed that the applet was running out of memory while trying to load the XML - admittedly a fairly hefty 27MB uncompressed. So for the web version at least, I have reverted to storing the data as plain text, which immediately reduced file size and loading time by a factor of 4, and solved the applet problem. Since then Dan and Toxi have suggested alternative ways of handling the XML, such as SAX, which streams the data in and generates events on the fly, rather than loading the whole XML tree into memory before parsing it. I'll be looking into that for any serious web implementation of this stuff.
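
To make the SAX idea concrete, here is a minimal sketch using the SAX parser that ships with Java. It is not code from this project: the element names `series` and `items` are hypothetical stand-ins for whatever the real export uses, and the handler only keeps running totals rather than building the full dataset.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.helpers.DefaultHandler;

// Illustrative sketch only: element names are assumptions about the XML layout.
final class SeriesSaxSketch {
    /** Receives parse events as the file streams past; no document tree is built. */
    static final class Totals extends DefaultHandler {
        int seriesCount = 0;
        long itemTotal = 0;
        private final StringBuilder text = new StringBuilder();

        @Override
        public void startElement(String uri, String localName, String qName, Attributes attrs) {
            text.setLength(0); // start collecting text for this element
            if ("series".equals(qName)) {
                seriesCount++;
            }
        }

        @Override
        public void characters(char[] ch, int start, int length) {
            text.append(ch, start, length); // text may arrive in several chunks
        }

        @Override
        public void endElement(String uri, String localName, String qName) {
            if ("items".equals(qName)) {
                itemTotal += Long.parseLong(text.toString().trim());
            }
            text.setLength(0);
        }
    }

    static Totals parse(InputStream in) {
        try {
            Totals handler = new Totals();
            SAXParserFactory.newInstance().newSAXParser().parse(in, handler);
            return handler;
        } catch (Exception e) {
            throw new IllegalStateException("Could not parse series XML", e);
        }
    }

    static Totals parse(String xml) {
        return parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
    }
}
```

Because the handler only holds the current element's text and a couple of counters, memory use stays roughly constant however large the file gets, which is the property that matters for an applet with a small heap.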

Finally, with almost 60000 objects on the screen, this visualisation raises some basic computation and design issues. Even using accelerated OpenGL, this is a tall order; I found I was getting around one frame per second on a moderately powerful computer. I have solved the issue here with a simple workaround (thanks Geoff for this one) - pre-render an image of the grid, then overlay the interactive elements. Performance issue solved. But there are some limitations: this approach means the grid layout is fixed. It's a significant move away from a truly "dynamic" visualisation, where all the elements are drawn on the fly. For visualisations at this scale, I don't think there's any other way, but as the design develops I'll be trying to push back towards the live, dynamic approach, as the dataset permits.
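
The pre-render trick is simple enough to show in miniature. The sketch below uses a plain pixel array in place of Processing's image and OpenGL machinery, so it is a stand-in for the idea rather than the actual implementation; the class name, the white highlight colour, and the `cellsDrawn` counter are all hypothetical.

```java
// Illustrative sketch only: a plain int[] pixel buffer stands in for a real image.
final class PrerenderedGrid {
    private final int columns;
    private final int cellSize;
    private final int cellCount;
    private final int width;
    private final int[] background; // the whole grid, drawn exactly once
    int cellsDrawn = 0;             // counts the expensive per-series draws

    PrerenderedGrid(int[] cellColours, int columns, int cellSize) {
        this.columns = columns;
        this.cellSize = cellSize;
        this.cellCount = cellColours.length;
        int rows = (cellCount + columns - 1) / columns;
        this.width = columns * cellSize;
        this.background = new int[width * rows * cellSize];
        for (int i = 0; i < cellCount; i++) {
            fillCell(background, i, cellColours[i]);
            cellsDrawn++;
        }
    }

    private void fillCell(int[] pixels, int index, int colour) {
        int x0 = (index % columns) * cellSize;
        int y0 = (index / columns) * cellSize;
        for (int y = y0; y < y0 + cellSize; y++) {
            for (int x = x0; x < x0 + cellSize; x++) {
                pixels[y * width + x] = colour;
            }
        }
    }

    /** Per-frame work: copy the cached grid, then overlay the one hovered cell. */
    int[] frame(int hoveredIndex) {
        int[] pixels = background.clone();
        if (hoveredIndex >= 0 && hoveredIndex < cellCount) {
            fillCell(pixels, hoveredIndex, 0xFFFFFFFF); // white highlight
        }
        return pixels;
    }
}
```

Each frame now costs one buffer copy plus a single cell, instead of one draw call per series. The trade-off is the one described above: any change to the layout or colour mapping means rebuilding the cached image.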
